It's been about a week since Avengers 2: Age of Ultron came out, and unsurprisingly it is still dominating the box office. The story revolves around the evil artificial intelligence Ultron, a robot created by Tony Stark to be a force for good. Unfortunately, Ultron turns on his creators and, in classic sci-fi robot fashion, tries to kill his maker while committing various other terrible acts. The question I wanted answered is whether an intelligent machine like Ultron could ever be created. Could Ultron happen?

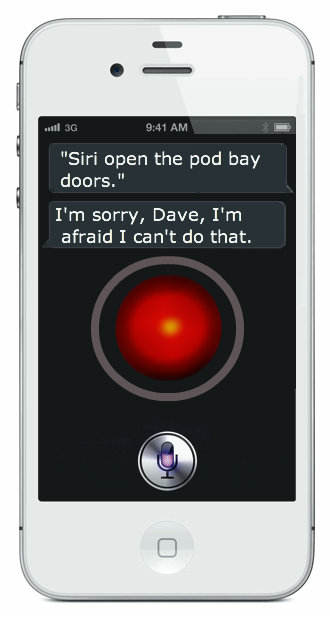

Well, what is Ultron? Ultron is an A.I., a thinking machine. A.I.s already exist, though. They're used in manufacturing, hospitals, phones, and even toys. Yeah, there's a little Ultron inside your iPhone. The difference between Siri and the Avengers' kill-bot is that while Ultron is a strong A.I., Siri and other modern A.I.s are weak. A weak A.I. is a non-sentient computer intelligence that performs one narrow task. Siri, for example, can "understand" what you're saying and then send that information to remote servers (its brain) to retrieve what you wanted.

Ultron, by comparison, is a strong A.I. Ultron can feel emotions, plan ahead, make jokes, perform a variety of tasks, and kill people while offering witty comebacks. A strong A.I., or A.G.I. (artificial general intelligence), is the endgame for many A.I. researchers and futurists: a machine capable of reason, judgement, general knowledge, common sense, planning, and learning. There are several proposed tests for figuring out if you have a strong A.I. on your hands, and I swear I'm not making these up. There's the coffee test, where the A.I. must figure out by itself how to make coffee in an unfamiliar home. There's the robot college student test, where it enrolls in a university and takes and passes classes like any other student. There's the one where it starts calling itself Ultron and talks like James Spader. And there's the most famous one of all, the Turing test.

So now that we know what Ultron is, a strong A.I. that exhibits human intelligence and behaviors, the question is whether an A.I. could turn evil and try to kill us. The entire history of sci-fi would say YES, it would come online and kill/enslave/consume us all, cough, cough, Terminator, cough, cough. Not only do sci-fi writers think A.I. could be the end of us all, so do people like Stephen Hawking and Bill Gates. But, you ask, why couldn't we program it so it wouldn't want to kill us? Unfortunately, an A.I. could simply find a way to change its programming, or create another version of itself lacking the bits of code keeping it from becoming an evil kill-bot.

So is there any way of having a benevolent A.I.? Is there anything we can do to avoid Ultron but still have an A.I.? Well, no, because there is no good. Good, benevolence, evil, malevolence: they're all human constructs, constructs an intelligent machine might not have. A machine might decide that the best way to never lose is to never play. It could believe that keeping people safe means controlling every aspect of their being; after all, that is technically true.

So if an A.I. could always turn evil and decide to control or kill us, why build one in the first place? The fact is that for every chance an A.I. might become evil, there is a chance it may stay good. Children have the potential to be heroic or horrific. An A.I. would be a child birthed not by a few people but by humanity as a whole, an offspring of science and technology with the capacity to send us into an age of tremendous peace and prosperity, or one of terror and tyranny. Today A.I. brings numerous benefits, from keeping us connected to lengthening our lives, but the future is unknown and holds many dangers as well as prizes. Whether we enter a great age of learning and wonder or suffer extinction by an Ultron-like destroyer depends not only on individual choices, but on humanity's choices as a whole.

Think what you will,

Taran B.
